YouTube has introduced additional safeguards for teens on its website, which include limiting the content they see that could lead them to form negative beliefs about themselves. As the Google-owned video sharing platform explains, teenagers are more likely to be critical of themselves if they see repeated messages about ideal social standards. In response, YouTube is now limiting, for European users, repeated recommendations of videos featuring specific fitness levels or body weights, as well as those that display “social aggression in the form of non-contact fights and intimidation.” As The Guardian notes, this rule is already being enforced in the US.

The website said it decided on those video categories after reviewing which ones “may be innocuous as a single video, but could be problematic for some teens if viewed repetitively.” In addition, it has deployed crisis center panels across Europe that will give teens a quick way to connect with live support from recognized crisis service partners. A panel could show up in younger users’ interface if they watch videos related to suicide, self-harm and eating disorders, among other sensitive topics. It could also pop up in their search results if they look for topics linked to specific health crises or emotional distress.

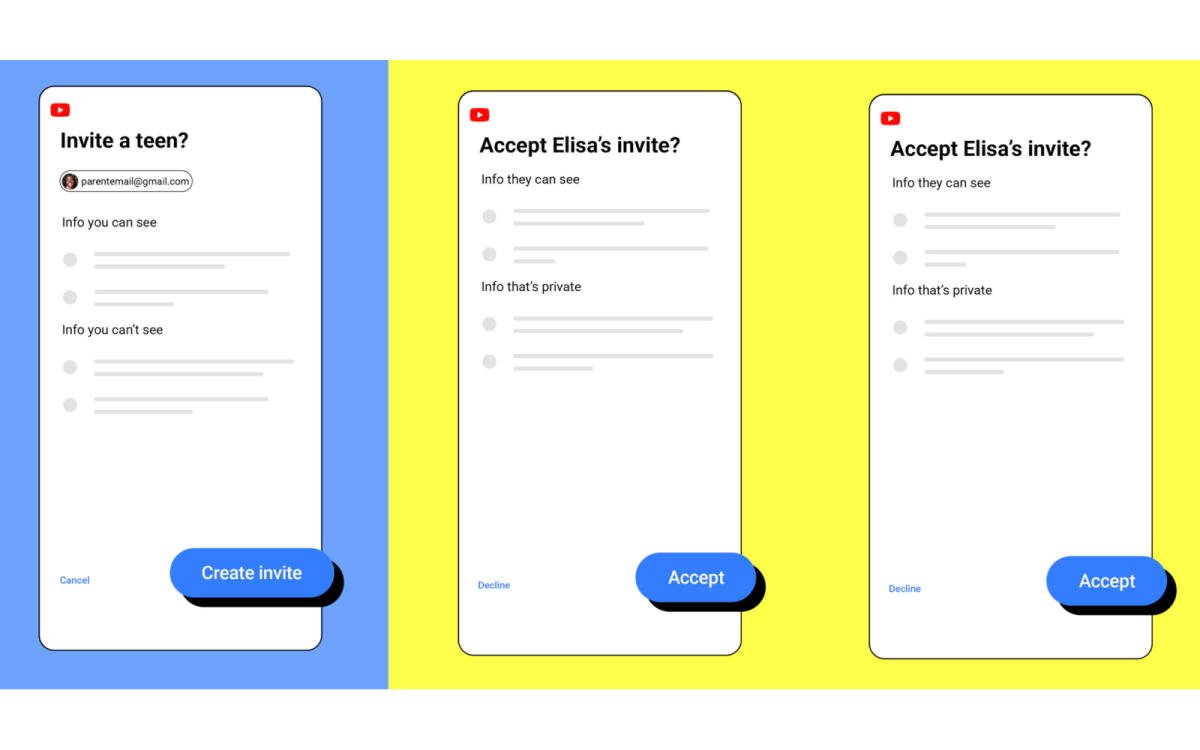

Aside from limiting potentially harmful recommendations, YouTube is adding a new parental control feature, for users in the US and other regions, that lets parents link their accounts to their teens’. Parents or guardians will see their child’s channel activity, such as the number of comments, uploads and subscriptions, in the Family Center hub. YouTube will also send them an email if their teen uploads a video or a Short or starts a livestream, even if the content is set to private.

The website told TechCrunch, though, that the alerts parents receive will not include information on the content of comments and uploads. Parents will also not be able to change the age listed on their kids’ accounts. This feature is a further expansion of the parental controls YouTube introduced in 2021. Back then, the website opened a public beta for supervised accounts that allowed guardians to control the kinds of videos their kids could see.
